WebAssembly proposal touted to improve Wasm web integration | InfoWorld

Technology insight for the enterprise

WebAssembly proposal touted to improve Wasm web integration 5 Mar 2026, 12:11 am

The WebAssembly Component Model, an architecture for building interoperable WebAssembly libraries, applications, and environments, is being touted as a way to elevate Wasm beyond its current status as “a second-class language for the web.”

The WebAssembly Component Model, in development since 2021, would provide critical capabilities for WebAssembly, according to a February 26 blog post from Mozilla software engineer Ryan Hunt. These capabilities include:

- A standardized self-contained executable artifact

- Support by multiple languages and toolchains

- Handling of loading and linking of WebAssembly code

- Support for Web API usage

A WebAssembly component defines a high-level API that is implemented with a bundle of low-level Wasm code, Hunt explained. “As it stands today, we think that WebAssembly Components would be a step in the right direction for the web,” he wrote.

Mozilla is working with the WebAssembly Community Group to design the WebAssembly Component Model, and Google is evaluating the model, according to Hunt. In his post, Hunt argued that although WebAssembly has added capabilities such as shared memory, exception handling, and bulk memory instructions since its introduction in 2017, it has been held back from wider web adoption. “There are multiple reasons for this, but the core issue is that WebAssembly is a second-class language on the web,” Hunt wrote. “For all of the new language features, WebAssembly is still not integrated with the web platform as tightly as it should be.”

WebAssembly has been positioned as a binary format to boost web application performance; it also has served as a compilation target for other languages. But Hunt argued that WebAssembly’s loose integration with the web leads to a poorer developer experience, so that developers only use it when they absolutely need it.

“Oftentimes, JavaScript is simpler and good enough,” Hunt wrote. “This means [Wasm] users tend to be large companies with enough resources to justify the investment, which then limits the benefits of WebAssembly to only a small subset of the larger web community.” JavaScript has advantages in loading code and using web APIs, which make it a first-class language on the web, wrote Hunt, while WebAssembly is not. Without the component model, he argued, WebAssembly is too complicated for web usage. He added that standard compilers do not produce WebAssembly that works on the web.
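To make the loading gap concrete, here is a minimal TypeScript sketch of what it takes to call a single Wasm function through the standard WebAssembly JavaScript API today. It is not taken from Hunt's post. The byte array is a hand-assembled illustrative module, and the exported name `add` is an assumption chosen for the example.

```typescript
// Hand-assembled bytes for a tiny illustrative module that exports add(a, b) on i32 values.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm" magic number + version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add
]);

// JavaScript glue has to compile and instantiate the module explicitly.
// The synchronous constructors are used here only because the module is tiny.
const wasmModule = new WebAssembly.Module(wasmBytes);
const wasmInstance = new WebAssembly.Instance(wasmModule, {});

// Exports arrive untyped, so the caller must assert the signature by hand.
const add = wasmInstance.exports.add as (a: number, b: number) => number;

console.log(add(2, 3));
```

Even in this best case, JavaScript does the loading, linking, and typing on the module's behalf, which is the kind of glue the article says keeps Wasm a second-class citizen.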

An ode to craftsmanship in software development 4 Mar 2026, 9:00 am

I was talking about the astonishing rise of AI-assisted coding with my friend Josh recently, and he said he was going to miss the craftsmanship aspect of actually writing code.

Now, I’m a big believer that software is a craft, not an engineering endeavor. The term “engineering” implies a certain amount of certainty and precision that can never be replicated in software. I’ve never been a big fan of the term “computer science” either, because again “science” implies the scientific method and a certain amount of repeatability. Part of what makes software development so hard is that no two projects are even remotely alike, and if you tried to repeat a project, you’d get a completely different result.

Some like to argue that writing software is like painting, but I’ve never followed that route either. Artists are usually free-flowing and unbound, restrained only by convention—and many artists feel utterly unrestrained even by convention.

Software always seems to be somewhere in between. The consensus among many developers—Uncle Bob Martin among them—is that writing software is a craft, more akin to carpentry or watchmaking. All three practices are somewhat limited by the physical properties of the materials. They require precision to get good results, and this precision requires care, commitment, and expertise.

So I get Josh’s feelings of loss about no longer being able to wield the craft of software development.

The conversation got a touch more interesting, though, when I said, “Well, think of it this way: You are now a senior craftsman with a tireless, eager, and constantly learning apprentice who is completely willing to do all the work in the shop without a single complaint.”

And that is quite a thought. Sure, we senior craftspeople celebrate writing elegant code, constructing beautiful class hierarchies, and designing working software. But I will admit that a lot of the work is tedious, and having an unflagging coder grinding out the “dirty work” is a really nice thing.

But it can become more than that. Your coding apprentice can build, at your direction, pretty much anything now. The task becomes more like conducting an orchestra than playing in it. Not all members of the orchestra want to conduct, but given that is where things are headed, I think we all need to consider it at least. The results are the same. You can dabble as much in code as you want. You can check every line, merely review the overall architecture, or, if you are like me, you can be quite content with moving past the grind of actually writing code to orchestrating the process and ensuring the proper final result.

Nevertheless, I feel Josh’s angst. I will miss the satisfaction of writing the lovely procedure that does one thing cleanly and quickly, of creating the single object that does everything you need it to do and nothing more, of getting things working just right. All of that is gone, as are the conductor’s days of playing a spotlight solo. It’s hard, but it’s where we are.

It’s not unlike choosing to become a manager—you leave behind your coding days for a different role. Sure, you miss the good old days of programming every day, but the new challenges are valuable and satisfying.

There are folks out there who write carefully crafted assembly. And in a few years, there will be folks doing the same thing with Java and C# and Pascal. Coding will soon become a quirky pastime, written only by eccentric old developers who relish the craft of software development.

It’s only been a few months, but I already view Claude Code as nothing more than an elaborate compiler, and the code it produces (in whatever language) as assembly code.

The right way to architect modern web applications 4 Mar 2026, 9:00 am

For decades, web architecture has followed a familiar and frankly exhausting pattern. A dominant approach emerges, gains near-universal adoption, reveals its cracks under real-world scale, and is eventually replaced by a new “best practice” that promises to fix everything the last one broke.

We saw it in the early 2000s, when server-rendered, monolithic applications were the default. We saw it again in the late 2000s and early 2010s, when the industry pushed aggressively toward rich client-side applications. And we saw it most clearly during the rise of single-page applications, which promised desktop-like interactivity in the browser but often delivered something else entirely: multi-megabyte JavaScript bundles, blank loading screens, and years of SEO workarounds just to make pages discoverable.

Today, server-side rendering is once again in vogue. Are teams turning back to the server because client-side architectures have hit a wall? Not exactly.

Both server-side rendering and client-side approaches are as compelling today as they ever were. What’s different now is not the tools, or their viability, but the systems we’re building and the expectations we place on them.

The upshot? There is no single “right” model for building web applications anymore. Let me explain why.

From websites to distributed systems

Modern web applications are no longer just “sites.” They are long-lived, highly interactive systems that span multiple runtimes, global content delivery networks, edge caches, background workers, and increasingly complex data pipelines. They are expected to load instantly, remain responsive under poor network conditions, and degrade gracefully when something goes wrong.

In that environment, architectural dogmatism quickly becomes a liability. Absolutes like “everything should be server-rendered” or “all state belongs in the browser” sound decisive, but they rarely survive contact with production systems.

The reality is messier. And that’s not a failure—it’s a reflection of how much the web has grown up.

The problem with architectural absolutes

Strong opinions are appealing, especially at scale. They reduce decision fatigue. They make onboarding easier. Declaring “we only build SPAs” or “we are an SSR-first organization” feels like a strategy because it removes ambiguity.

The problem is that real applications don’t cooperate.

A single modern SaaS platform often contains wildly different workloads. Public-facing landing pages and documentation demand fast first contentful paint, predictable SEO behavior, and aggressive caching. Authenticated dashboards, on the other hand, may involve real-time data, complex client-side interactions, and long-lived state where a server round trip for every UI change would be unacceptable.

Trying to force a single rendering strategy across all of that introduces what many teams eventually recognize as architectural friction. Exceptions creep in. “Just this once” logic appears. Over time, the architecture becomes harder to understand than if those trade-offs had been acknowledged explicitly from the start.

Not a return to the past, but an expansion

It’s tempting to describe the current interest in server-side rendering as a return to fundamentals. In practice, that comparison breaks down quickly.

Classic server-rendered applications operated on short request life cycles. The server generated HTML, sent it to the browser, and largely forgot about the user until the next request arrived. Interactivity meant full page reloads, and state lived almost entirely on the server.

Modern server-rendered applications behave very differently. The initial HTML is often just a starting point. It is “hydrated,” enhanced, and kept alive by client-side logic that takes over after the first render. The server no longer owns the full interaction loop, but it hasn’t disappeared either.

Even ecosystems that never abandoned server rendering, PHP being the most obvious example, continued to thrive because they solved certain problems well; they provided predictable execution models, straightforward deployments, and proximity to data. What changed was not their relevance, but the expectation that they now coexist with richer client-side behavior rather than compete with it.

This isn’t a rollback. It’s an expansion of the architectural map.

Constraint-driven architecture

Once teams step away from ideology, the conversation becomes more productive. The question shifts from “What is the best model?” to “What are we optimizing for right now?”

Data volatility matters. Content that changes once a week behaves very differently from real-time, personalized data streams. Performance budgets matter too. In an e-commerce flow, a 100-millisecond delay can translate directly into lost revenue. In an internal admin tool, the same delay may be irrelevant.

Operational reality plays a role as well. Some teams can comfortably run and observe a fleet of SSR servers. Others are better served by static-first or serverless approaches simply because that’s what their headcount and expertise can support.

These pressures rarely apply uniformly across an application. Systems with strict uptime requirements may even choose to duplicate logic across layers to reduce coupling and failure impact, for example, enforcing critical validation rules both at the API boundary and again in the client, so that a single back-end failure doesn’t completely block user workflows.
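A minimal TypeScript sketch of that idea follows. The `OrderInput` shape, the limits, and the function names are all hypothetical. For brevity both layers call one shared function; a team that wants to reduce coupling further, as described above, might deliberately keep two independent copies.

```typescript
// Hypothetical order form; the fields and limits are illustrative assumptions.
interface OrderInput {
  quantity: number;
  email: string;
}

// One pure rule set that both the client bundle and the API handler can run.
function validateOrder(input: OrderInput): string[] {
  const problems: string[] = [];
  const q = input.quantity;
  const isWholeNumber = isFinite(q) && Math.floor(q) === q;
  if (!isWholeNumber || q < 1 || q > 100) {
    problems.push("quantity must be a whole number between 1 and 100");
  }
  if (!/^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(input.email)) {
    problems.push("email must look like name@example.com");
  }
  return problems;
}

// Client side: give feedback without a round trip, even if the back end is unreachable.
function canSubmit(input: OrderInput): boolean {
  return validateOrder(input).length === 0;
}

// API boundary: repeat the check, because the server cannot assume the client ran it.
function handleOrderRequest(body: OrderInput): { statusCode: number; errors: string[] } {
  const errors = validateOrder(body);
  return { statusCode: errors.length === 0 ? 201 : 422, errors };
}

console.log(canSubmit({ quantity: 2, email: "dev@example.com" }));
console.log(handleOrderRequest({ quantity: 0, email: "not-an-email" }));
```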

Hybrid architectures stop being a compromise in this context. They become a way to make trade-offs explicit rather than accidental.

When the server takes on more UI responsibility

One of the more subtle shifts in recent years is how much responsibility the server takes on before the browser becomes interactive.

This goes well beyond SEO or faster first paint. Servers live in predictable environments. They have stable CPU resources and direct access to databases and internal services. Browsers, by contrast, run on everything from high-end desktops to underpowered mobile devices on unreliable networks.

Increasingly, teams are using the server to do the heavy lifting. Instead of sending fragmented data to the client and asking the browser to assemble it, the server prepares UI-ready view models. It aggregates data, resolves permissions, and shapes state in a way that would be expensive or duplicative to do repeatedly on the client.

By the time the payload reaches the browser, the client’s job is narrower: activate and enhance. This reduces the time to interactive and shrinks the amount of transformation logic shipped to users.
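As a rough illustration, a server-side view-model builder might look like the TypeScript sketch below. The record shapes, the `billing-admin` role, and the function name are assumptions made up for this example, not part of any particular framework.

```typescript
// Hypothetical raw records, as separate back-end services might return them.
interface UserRecord { id: number; displayName: string; roles: string[] }
interface InvoiceRecord { id: number; ownerId: number; amountCents: number; paid: boolean }

// UI-ready shape: already filtered, formatted, and permission-resolved.
interface DashboardViewModel {
  greeting: string;
  unpaidTotal: string;
  canIssueRefunds: boolean;
  invoices: { id: number; label: string }[];
}

// Runs on the server, next to the data, so the browser never sees other users' invoices.
function buildDashboardViewModel(user: UserRecord, invoices: InvoiceRecord[]): DashboardViewModel {
  const dollars = (cents: number) => `$${(cents / 100).toFixed(2)}`;
  const mine = invoices.filter((inv) => inv.ownerId === user.id);
  const unpaidCents = mine
    .filter((inv) => !inv.paid)
    .reduce((sum, inv) => sum + inv.amountCents, 0);
  return {
    greeting: `Welcome back, ${user.displayName}`,
    unpaidTotal: dollars(unpaidCents),
    canIssueRefunds: user.roles.indexOf("billing-admin") !== -1,
    invoices: mine.map((inv) => ({
      id: inv.id,
      label: `#${inv.id} ${dollars(inv.amountCents)} ${inv.paid ? "paid" : "due"}`,
    })),
  };
}
```

The client receives one small object it can render directly, rather than two raw collections plus the logic to join, filter, and format them.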

This naturally leads to incremental and selective hydration. Hydration is no longer an all-or-nothing step. Critical, above-the-fold elements become interactive first. Less frequently used components may not hydrate until the user engages with them.
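The staging can be sketched without any framework. In the TypeScript below, the `Island` type, the two policy names, and the helper functions are hypothetical; real frameworks expose their own mechanisms for deferring hydration.

```typescript
type HydrationPolicy = "immediate" | "on-interaction";

interface Island {
  id: string;
  policy: HydrationPolicy;
  hydrated: boolean;
  hydrate: () => void; // in a real app this would attach event handlers and state
}

// The log array stands in for observable side effects so the order can be inspected.
function createIsland(id: string, policy: HydrationPolicy, log: string[]): Island {
  const island: Island = {
    id,
    policy,
    hydrated: false,
    hydrate: () => {
      if (island.hydrated) return; // hydrating twice would duplicate handlers
      island.hydrated = true;
      log.push(id);
    },
  };
  return island;
}

// First pass after the server HTML arrives: only critical islands become interactive.
function hydrateCritical(islands: Island[]): void {
  for (const island of islands) {
    if (island.policy === "immediate") island.hydrate();
  }
}

// Called when the user first engages with a deferred island.
function hydrateOnEngagement(islands: Island[], id: string): void {
  for (const island of islands) {
    if (island.id === id) island.hydrate();
  }
}

const hydrationLog: string[] = [];
const islands = [
  createIsland("search-box", "immediate", hydrationLog),
  createIsland("comment-thread", "on-interaction", hydrationLog),
];
hydrateCritical(islands);
hydrateOnEngagement(islands, "comment-thread");
console.log(hydrationLog);
```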

Performance optimization, in this model, becomes localized rather than global. Teams improve specific views or workflows without restructuring the entire application. Rendering becomes a staged process, not a binary choice.

Debuggability changes the architecture conversation

As applications grow more distributed, performance is no longer the only concern that shapes architecture. Debuggability increasingly matters just as much.

In simpler systems, failures were easier to trace. Rendering happened in one place. Logs told a clear story. In modern applications, rendering can be split across build pipelines, edge runtimes, and long-lived client sessions. Data can be fetched, cached, transformed, and rehydrated at different moments in time.

When something breaks, the hardest part is often figuring out where it broke.

This is where staged architectures show a real advantage. When rendering responsibilities are explicit, failures tend to be more localized. A malformed initial render points to the server layer. A UI that looks fine but fails on interaction suggests a hydration or client-side state issue. At an architectural level, this mirrors the single responsibility principle applied beyond individual classes: Each stage has a clear reason to change and a clear place to investigate when something goes wrong.

Architectures that try to hide this complexity behind “automatic” abstractions often make debugging harder, not easier. Engineers end up reverse engineering framework behavior instead of reasoning about system design. It’s no surprise that many senior teams now prefer systems that are explicit, even boring, over ones that feel magical but opaque.

Frameworks as enablers, not answers

This shift is visible across the ecosystem. Angular is a good example. Once seen as the archetype of heavy client-side development, it has steadily embraced server-side rendering, fine-grained hydration, and signals. Importantly, it doesn’t prescribe a single way to use them.

That pattern repeats elsewhere. Modern frameworks are no longer trying to win an ideological war. They are providing knobs and dials, ways to control when work happens, where state lives, and how rendering unfolds over time.

The competition is no longer about purity. It’s about flexibility under real-world constraints. Pure architectures tend to look great in greenfield projects. They age less gracefully.

As requirements evolve, and they always do, strict models accumulate exceptions. What began as a clean set of rules turns into a collection of caveats. Architectures that acknowledge complexity early tend to be more resilient. Clear boundaries make it possible to evolve one part of the system without destabilizing everything else.

Rigor in 2026 is not about enforcing sameness. It’s about enforcing clarity: knowing where code runs, why it runs there, and how failures propagate.

Embracing the spectrum

The idea of a single “right” way to build for the web is finally losing its grip. And that’s a good thing.

Server-side rendering and client-side applications were never enemies. They were tools that solved different problems at different moments in time. The web has matured enough to admit that most architectural questions don’t have universal answers.

The most successful teams today aren’t chasing trends. They understand their constraints, respect their performance budgets, and treat rendering as a spectrum rather than a switch. The web didn’t grow up by picking a side. It grew up by embracing nuance, and the architectures that will last are the ones that do the same.

What I learned using Claude Sonnet to migrate Python to Rust 4 Mar 2026, 9:00 am

If there’s one universal experience with AI-powered code development tools, it’s how they feel like magic until they don’t.

One moment, you’re watching an AI agent slurp up your codebase and deliver a remarkably sharp analysis of its architecture and design choices. And the next, it’s spamming the console with “CoreCoreCoreCore” until the scroll-back buffer fills up and you’ve run out of tokens.

As AI-powered coding and development tools advance, we’ve formed a clearer sense of what they do well, do badly, and in some cases, should not do at all. Theoretically, they empower developers by doing the kind of work that would otherwise be tedious or overwhelming: generating tests, refactoring, creating examples for documentation, etc. In practice, such “empowerment” often comes at a cost. What the AI makes easier up front only makes things harder later on.

One golden-dream scenario I’ve mulled over is using AI tools to port code from one language to another. If I’d spun up a Python project, then decided later to migrate it to Rust, would an AI agent put me in the driver’s seat faster? Or could it at least ride shotgun with me?

A question like that deserves a hands-on answer—yes, even if I ended up burning my fingers doing it. So, here’s what happened when I tried using Claude Code to port one of my Python projects to Rust.

Project setup and why I chose Rust

I decided to try porting a Python-based blogging system I wrote, a server-side app that generates static HTML and provides a WordPress-like interface. I chose it, in part, because it has relatively few features: a per-blog templating system, categories and tags, and an interface that lets you write posts in HTML, with a rich-text editor, or via plaintext Markdown.

I made sure all the features—the templating system, the ORM, the web framework—had one or more parallels in the Rust ecosystem. The project also included some JavaScript front-end code, so I could potentially use it to test how well the tooling dealt with a mixed codebase.

I chose Rust as the porting target largely because Rust’s correctness and safety guarantees come at compile time, not runtime. I reasoned the AI ought to benefit from useful feedback from the compiler along the way, and that would make the porting process more fruitful. (Hope springs eternal, right?)

For the AI, I initially chose Claude Sonnet 4.5, then had to upgrade to Claude Sonnet 4.6 when the older version was abruptly discontinued. I also used Google’s own Antigravity IDE, which I’ve previously reviewed.

The first directive

I made a copy of my Python codebase directory, opened Antigravity there, and started with a simple directive:

This directory contains a Python project, a blogging system. Examine the code and devise a plan for how to migrate this project to Rust, using native Rust libraries but preserving the same functionality.

After chewing on the code, Claude recommended the following components as part of the plan to “transition to a modern, high-performance Rust stack”:

- Axum for the web layer.

- SeaORM for database interactions.

- Tera for templating.

- Tokio for asynchronous task handling (replacing Python’s multiprocessing).

Claude didn’t have any obvious difficulties finding appropriate substitutes for the Python libraries, or with mapping operations from one language to the other—such as using tokio for async to replace Python multiprocessing. I suspect part of what made this part relatively easy was the design of my original program, which didn’t rely on tricky Python features like dynamic imports. It also helped that Claude proceeded by analyzing and re-implementing program behaviors rather than individual interfaces or functions. (This approach also had some limitations, which I’ll discuss below.)

I looked over the generated plan and noted it didn’t create any placeholder data for a newly initialized database—a sample user, a blog with a sample post in it, etc. Claude added this in and I confirmed it worked by restarting the program and inspecting the created database. So far, so good.

A few missing pieces

The next stage involved discovering just how much Claude didn’t do. Despite discovering and building my app’s core page-rendering logic, it didn’t create any of the user-facing infrastructure—the admin panel for logging in and editing and managing posts. Admittedly, though, my instructions said nothing about that interface. Should I blame Claude for not being diligent enough to look, or blame myself for not being explicit in my original instructions? Either way, I pointed out the omission and got back a plan for doing that work:

I'm now addressing the missing Admin UI by analyzing the original Bottle templates and planning their migration to Tera, including the login screen and main dashboard.

Note: Bottle was the web framework I used for my Python project. This formed a test of its own: How well would Claude cope with migrating from a lesser-known library? This by itself turned out not to be a significant issue, but far bigger problems lurked elsewhere.

It was at this point that the bulk of my back-and-forth with Claude began. For developers already working with AI tools, this cycle will be familiar: the prompt → generate → test → re-prompt loop. Basically, I’d have Claude implement some missing feature (in this case, each element of the admin UI), fire up the program to test it for myself, run into various errors or omissions, and cajole Claude into fixing them.

The first problem I found in the admin UI was an uncaught runtime error from the web templates, something not addressed by Rust’s compile-time checking. Then the login page for the admin panel came up blank. Then, after the login page worked, it led me to a placeholder page reading “Login logic not yet implemented.” Then, the logic for accepting usernames and passwords proved faulty. And so on, all through each of the original application components.

Major and minor malfunctions

In some ways, using Claude to migrate code mirrored my previous experiences with rewriting or migrating programs without an AI tool. Everything proceeded in fits and starts, and things that seemed like they ought to be simple turned out to be unexpectedly difficult. Occasionally the reverse happened: Something I thought would be challenging resolved itself quickly; a nice surprise.

One of the big differences, however—which will be familiar to anyone who’s used AI coding tools—was how Claude would sometimes malfunction and require major intervention to get back on track. At one point, when I prompted Claude to continue working on implementing the admin page for each blog, it malfunctioned and started printing CoreCoreCoreCoreCore... to the console. I ended up with hundreds of lines, and Core also started showing up randomly at the end of various responses:

Implementing Blog Detail and Post ListCoreCore

I've implemented the blog detail and post management features, fixed type mismatches, and updated the project artifacts. I'm now performing a deep dive into the remaining compilation error by capturing the complete cargo check output to ensure the system is production-ready.CoreCoreCoreCore

Then, I started getting warnings that the model’s generation exceeded the maximum output token limit. The problem cleared up after I restarted the session the next day, but after that I eyeballed all the outputs for similar weird glitches.

Something else I noticed was that Claude would begin with untested assumptions about its environment and only correct them after stubbing its toes, and then not always persistently. For instance, it tended to issue shell commands in bash syntax, error out, realize it was using PowerShell, and only then issue a proper command.

This is a common pattern with AI code tools, I’ve noticed: They tend to only do as much planning as you tell them to, and it’s easy to forget some of the details that need to be mapped out. The more persistently you define things for the model, the more consistent the results will be. (Note that more consistent is just that: not always or perfectly consistent.)

Finally, inspecting the generated code by hand revealed many ways Claude ignored the intentions of the original code. For instance, in my original Python program, all the routes for the web UI had a login-validation decorator. If you weren’t logged in, you got bounced to a login page. Claude almost completely failed to honor this pattern in the generated code. Almost every route on the admin UI—including those that performed destructive actions—was completely unprotected from unauthorized use.

Also, when those routes did have validation, it came in the form of a boilerplate piece of code inserted at the top of the route function, instead of something modular like a function call, a decorator, or a macro. I don’t know if Claude didn’t recognize the original Python decorator pattern for what it was, or didn’t have a good idea for how to port it effectively to Rust. Either way, Claude didn’t even mention the omission; I had to discover it for myself the hard way.
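For readers who have not seen the pattern, the modular shape looks roughly like the sketch below. It is written in TypeScript purely as a language-neutral illustration; it is neither the original Python decorator nor the Rust that Claude generated, and the `Req`, `Res`, and `requireLogin` names are hypothetical.

```typescript
// Hypothetical request and response shapes; a real web framework supplies its own.
interface Req { path: string; sessionUser?: string }
interface Res { statusCode: number; redirectTo?: string; body?: string }
type Handler = (req: Req) => Res;

// The login check lives in exactly one place and wraps any handler it is given.
function requireLogin(handler: Handler): Handler {
  return (req) => {
    if (!req.sessionUser) {
      // Anonymous users are bounced to the login page and never reach the handler.
      return { statusCode: 302, redirectTo: "/login" };
    }
    return handler(req);
  };
}

// A destructive route opts in with a single wrapper call instead of pasted boilerplate.
const deletePost: Handler = requireLogin((req) => ({
  statusCode: 200,
  body: `post deleted by ${req.sessionUser}`,
}));

console.log(deletePost({ path: "/admin/post/1/delete" }));
console.log(deletePost({ path: "/admin/post/1/delete", sessionUser: "ana" }));
```

With the check centralized, an unprotected route stands out in review because the wrapper call is visibly missing, and a fix to the check applies to every route at once.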

Three takeaways

After a few days of push-and-pull with Claude, I migrated a fair amount of the original app’s functionality to Rust, then decided to pause and take stock. I came up with three major takeaways.

1. Know the source and target

Using tools like Claude to migrate between languages doesn’t mean you can get away with not knowing both the source and target languages. If you are not proficient in the language you’re migrating from or to, you might ask the agent to clarify things and get some help there. But that isn’t a substitute for being able to recognize when the generated code is problematic. If you don’t know what you don’t know, Claude won’t be much help to you.

I’m more experienced with Python than I am with Rust, but I had enough Rust experience to a) know that just because Rust code compiles doesn’t make it unproblematic and b) recognize missing logic in the code—such as the lack of security checks in API routes. My takeaway is that many of the issues in porting between languages won’t be big, obvious ones, but subtler issues that demand knowing both domains well. Automation might augment experience, but it can’t replace it.

2. Expect to iterate

As I mentioned before, the more explicit and persistent your instructions are, the more likely you’ll get something resembling your intentions. That said, it’s unlikely you’ll get exactly what you want on the first, second, third, or even fourth try—not even for any single aspect of your program, let alone the whole thing. Mind reading, let alone accurately, is still quite a way off. (Thankfully.)

A certain amount of back-and-forth to get to what you want seems inevitable, especially if you are re-implementing a project in a different language. The benefit is you’re forced to confront each set of changes as you go along, and make sure they work. The downside is the process can be exhausting, and not in the same way making iterative changes on your own would be. When you make your own changes, it’s you versus the computer. When the agent is making changes for you, it’s you versus the agent versus the computer. The determinism of the computer by itself is replaced by the indeterminism of the agent.

3. Take full responsibility for the results

My final takeaway is to be prepared to take responsibility for every generated line of code in the project. You cannot decide that just because the code runs, it’s okay. In my case, Claude may have been the agent that generated the code, but I was there saying yes to it and signing off on decisions at every step. As the developer, you are still responsible—and not just for making sure everything works. It matters how well the results utilize the target language’s metaphors, ecosystem, and idioms.

There are some things only a developer with expertise can bring to the table. If you’re not comfortable with the technologies you’re using, consider learning the landscape first, before ever cracking open a Claude prompt.

Angular releases patches for SSR security issues 4 Mar 2026, 12:27 am

The Angular team from Google has announced the release of two security updates to the Angular web framework, both pertaining to SSR (server-side rendering) vulnerabilities. Developers are advised to update SSR applications as soon as possible. Patching can help users avoid the theft of authorization headers as well as phishing scams.

A bulletin on the issues was published February 28. One of the vulnerabilities, labeled as critical, pertains to SSRF (server-side request forgery) and header injection. The patched version can be found here. The second vulnerability, labeled as moderate, pertains to an open redirect via the X-Forwarded-Prefix header. That patch can be found here.

The SSRF vulnerability found in the Angular SSR request handling pipeline exists because Angular’s internal URL reconstruction logic directly trusts and consumes user-controlled HTTP headers, specifically the host and X-Forwarded-* family, to determine the application’s base origin without validation of the destination domain. This vulnerability manifests through implicit relative URL resolution, explicit manual construction, and confidentiality breach, the Angular team said. When exploited successfully, this SSRF vulnerability allows for arbitrary internal request steering. This can lead to the theft of sensitive Authorization headers or session cookies by redirecting them to an attacker’s server. Attackers also can access and transmit data from internal services, databases, or cloud metadata endpoints not exposed to the public internet. Also, attackers could access sensitive information processed within the application’s server-side context.

The open redirect vulnerability, meanwhile, exists in the internal URL processing logic in Angular SSR. This vulnerability allows attackers to conduct large-scale phishing and SEO hijacking, the Angular team said.

The team recommends updating SSR applications to the latest patch version as soon as possible. If an app does not deploy SSR to production, there is no immediate need to update, they said. Developers on an unsupported version of Angular or unable to update quickly are advised to avoid using req.headers for URL construction. Instead, they should use trusted variables for base API paths. Another workaround is implementing a middleware in the server.ts to enforce numeric ports and validated hostnames.
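The two workarounds can be sketched as plain TypeScript functions. This is a framework-agnostic illustration, not the Angular team's patch or an Angular API: the allowlist, the `API_BASE` constant, and both function names are assumptions, and wiring the check into server.ts middleware is left out.

```typescript
// Assumed allowlist; a real deployment would load this from trusted configuration.
const TRUSTED_HOSTNAMES = ["app.example.com", "www.example.com"];

// Accepts "hostname" or "hostname:port", where the port must be purely numeric.
function isTrustedHostHeader(hostHeader: string | undefined): boolean {
  if (!hostHeader) return false;
  const match = /^([a-z0-9.-]+)(?::(\d{1,5}))?$/i.exec(hostHeader);
  if (!match) return false; // rejects "@", "/", whitespace, and non-numeric ports
  const hostname = match[1].toLowerCase();
  if (match[2] !== undefined) {
    const port = Number(match[2]);
    if (port < 1 || port > 65535) return false;
  }
  return TRUSTED_HOSTNAMES.indexOf(hostname) !== -1;
}

// Build outbound URLs from a trusted constant, never from request headers.
const API_BASE = "https://api.example.com";
function apiUrl(path: string): string {
  return `${API_BASE}/${path.replace(/^\/+/, "")}`;
}

console.log(isTrustedHostHeader("app.example.com:4000"));
console.log(isTrustedHostHeader("app.example.com:80@evil.test"));
console.log(apiUrl("/users"));
```

A middleware built on a check like this would typically reject the request outright when the header fails validation, rather than falling back to a default origin.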

Postman API platform adds AI-native, Git-based workflows 3 Mar 2026, 10:02 pm

Looking to accelerate API development via AI, Postman has added AI-native, Git-based API workflows to its Postman API platform. The company also introduced the Postman API Catalog, a central system of record that provides a single view of APIs and services across an organization.

The new Postman platform capabilities were announced March 1. With the new release, Postman’s AI-powered intelligence layer, rather than operating as a standalone assistant, now runs inside the platform, with visibility into specifications, tests, environments, and real production behavior, Postman said. Agent Mode in Postman now works with Git repositories to understand API collections, definitions, and underlying code. This reduces manual steps in workflows such as debugging, writing tests, and syncing code with API collections, according to the company.

Additional new AI-native capabilities in the API platform include:

- Native Git workflows to manage API specs, collections, tests, mocks, and environments directly in developers’ Git repos and local file systems.

- AI-powered coordination with Agent Mode across specs, tests, and mocks to automate multi-step changes with broad workflow context, including input provided by MCP servers from Atlassian, Amazon CloudWatch, GitHub, Linear, Sentry, and Webflow.

- Integrated API distribution to publish documentation, workflows, sandboxes, and SDKs in one place.

The new API Catalog, meanwhile, provides a central system of record for APIs and services, delivering enterprise-wide visibility and governance. The API Catalog provides a real-time view of which APIs and services exist, how they are performing, and who owns them, Postman said.

Cloud architects earn the highest salaries 3 Mar 2026, 9:00 am

I’ve watched cloud careers rise and fall with each new wave of tools, from the early “lift-and-shift everything” days to today’s platform engineering, AI-ready data estates, and security-by-default mandates. Through all of it, the role that stays stubbornly in demand is the cloud architect because the hardest part of cloud has never been spinning up resources. The hard part is making hundreds of decisions that won’t quietly compound into outages, cost blowouts, security gaps, or organizational gridlock.

That’s why, even when organizations are moving from cloud to cloud or swapping one set of managed services for another, they still need deep planning capabilities. The platform names change, the service catalogs get refreshed, and vendors repackage features, but the enterprise constraints remain: regulatory obligations, latency and resiliency requirements, identity and access realities, data gravity, contractual risk, and the simple fact that large companies rarely move in a straight line. Cloud architecture is the discipline that prevents transformation programs from becoming expensive improvisation.

Easy to adopt, hard to industrialize

Most companies can get to cloud quickly. A few motivated teams, a credit card, and some well-meaning enthusiasm can produce working workloads in weeks. What you can’t do quickly is scale that success safely across dozens or hundreds of teams while preserving governance, predictable costs, and operational integrity. Industrializing cloud means standardizing patterns without crushing innovation, creating guardrails without blocking delivery, and giving engineers paved roads that are truly easier than off-roading.

This is where architects become force multipliers. In many enterprises, you’ll find dozens of cloud architects assigned across portfolios, projects, and solution development efforts, with a mix of junior and senior levels. Junior architects often focus on implementing reference patterns, helping teams conform to landing zones, and translating standards into deployable templates. Senior architects spend more time shaping the operating model, defining the target architecture, arbitrating trade-offs, and coaching leaders through decisions that ripple across the business.

Compensation follows leverage. In major markets, it’s common to see total annual compensation for experienced cloud architects exceed $200,000, particularly when the role includes broad platform scope, security accountability, and cross-domain influence. One good architect can keep a large organization out of trouble in ways that save far more than the cost of the role.

Daily life of a cloud architect

The best architects don’t “draw diagrams” as an end in itself. They create clarity. On a daily basis, they translate business intent into technical constraints and then into designs that teams can execute. They review solution approaches, challenge hidden assumptions, and ensure that the architecture aligns with the enterprise’s risk posture, delivery maturity, and budget reality.

A typical day includes a steady cadence of conversations and artifacts. There are design reviews where an architect examines network topology, identity flows, encryption boundaries, data classification, and resiliency patterns to verify that a workload won’t fail compliance audits or operational expectations. There are platform decisions about landing zones, shared services, segmentation strategies, private connectivity, and the balance between central control and team autonomy. There is constant attention to cost behavior because architectures don’t just “run.” They consume, and consumption becomes a strategic issue at scale.

Architects also mediate between competing truths. Security wants least privilege and tight controls, product teams want speed, finance wants predictability, and operations wants standardization. The architect’s job is to create a design that meets the business goal with an operationally supportable system. That means documenting nonfunctional requirements, setting service-level objectives, designing for failure, planning disaster recovery, choosing managed services wisely, and preventing accidental complexity.

Another major function is modernization planning. Even when the company is not migrating, it is still evolving: moving from VMs to containers, from containers to serverless, from bespoke data pipelines to managed analytics platforms, or from one identity approach to a unified zero-trust posture. Cloud architects provide the sequencing and the guardrails so that change doesn’t break everything that currently works.

Why demand stays high

Cloud-to-cloud migrations and moves from technology to technology within the cloud are often driven by economics, risk, mergers and acquisitions, data residency, or strategic leverage against a vendor. These moves are rarely clean. They involve interoperability, phased cutovers, temporary duplication, and years of coexistence. In that environment, teams can’t just chase feature parity; they need an architectural blueprint that defines what “done” means and how to get there without creating a brittle, duplicated mess.

Architects are also the antidote to the myth that cloud decisions are reversible. In theory, everything is abstracted. In reality, organizations build around specific services, identity and access management, logging pipelines, networking constructs, and operational habits. Those become sticky. An architect anticipates stickiness and designs for it, using patterns that preserve options where it matters and committing deliberately where the payoff is worth it.

This is also why advancement opportunities are so strong. As architectures grow, the role naturally expands into platform leadership, cloud center of excellence direction, principal architect positions, and enterprise architecture. The most valuable architects become trusted advisors because they can connect strategy to execution without hand-waving.

How to become a cloud architect

Start by building depth in fundamentals and breadth in systems thinking. You can’t architect what you don’t understand, so get hands-on with networking, identity, security, and observability, not just compute and storage. Learn how systems fail, how incidents are managed, and how costs emerge from architecture, because those realities shape every good design.

Next, accumulate “pattern experience.” Build and operate a few real systems end to end, then document what you learned. What would you standardize? What would you avoid? Which trade-offs surprised you? Architecture is applied judgment, and judgment comes from seeing consequences over time. Pair that with structured learning, including cloud provider certifications if they help you organize your knowledge, but don’t confuse badges with mastery. The goal is to be fluent in a cloud’s primitives while remaining capable of designing across clouds and across organizational boundaries.

Finally, develop the communication skills that turn architecture into outcomes. Learn to write clear decision records, present trade-offs without drama, and negotiate constraints with empathy. The strongest architects are credible because they can meet teams where they are, raise the maturity level pragmatically, and keep the enterprise moving forward without creating bureaucracy.

Cloud architects remain in such high demand because they reduce risk, prevent costly missteps, and make cloud adoption scalable and repeatable. Their daily work blends technical design, governance, cost, security, and cross-team alignment. If you want the role, build strong fundamentals, collect real-world pattern experience, and master the communication skills that turn diagrams into dependable systems.

Under the hood with .NET 11 Preview 1 3 Mar 2026, 9:00 am

.NET’s annual cadence has given the project a solid basis for rolling out new features, as well as a path for improving its foundations. No longer tied to Windows updates, the project can provide regular previews alongside its bug and security fixes, allowing us to get a glimpse of what is coming next and to experiment with new features. At the same time, we can see how the upcoming platform release will affect our code.

The next major release, .NET 11, should arrive in November 2026, and the project recently unveiled its first public preview. Like earlier first looks, it’s nowhere near feature complete, with several interesting developments marked as “foundational work not yet ready for general use.” Unfortunately, that means we don’t get to play with them in Preview 1. Work is continuing and can be tracked on GitHub.

What’s most interesting about this first preview isn’t new language features (we’ll learn more about those later in the year), but rather the underlying infrastructure of .NET: the compiler and the runtime. Changes here reveal intentions behind this year’s release and point to where the team thinks we’ll be running .NET in the future.

Changes for Android and WebAssembly

One big change for 2026 is a move away from the Mono runtime for Android .NET applications to CoreCLR. The modern .NET platform evolved from the open source Mono project, and even though it now has its own runtime in CoreCLR, it has used the older runtime as part of its WebAssembly (Wasm) and Android implementations.

Switching to CoreCLR for Android allows developers to get the same features on all platforms and makes it easier to ensure that MAUI behaves consistently wherever it runs. The CLR team notes that as well as improving compatibility, there will be performance improvements, especially in startup times.

For Wasm, the switch should again make it easier to ensure common Blazor support for server-side and for WebAssembly code, simplifying the overall application development process. The project to make this move is still in its early stages, with an initial SDK and interoperability work complete. There’s still a lot to do before it’ll be possible to run more than “Hello World” using CoreCLR on Wasm and WebAssembly System Interface (WASI). The project aims to have support for RyuJIT by the end of the .NET 11 development cycle.

Full support won’t arrive until .NET 12, but having a .NET runtime that’s code-compatible with the rest of .NET for WebAssembly is a big win for both platforms. You should treat the .NET 11 Wasm CoreCLR capabilities as a preview, allowing you to experiment with various scenarios and use those experiments to help guide future development.

Native support for distributed computing

One of the more interesting new features appears to be a response to changes in the ways we build and deliver code. Much of what we build still runs on one machine, especially desktop applications, although more and more code needs to interact with external APIs. That code must run asynchronously so that an API call doesn’t become a blocker and hold up a user’s PC or device while an application waits for a response from a remote server. Operating this way is even more important for cloud-native applications, which are often loosely connected sets of microservices managed by platforms like Kubernetes or serverless Functions on Azure or another cloud platform.

.NET 11’s CoreCLR is being re-engineered to improve support for this increasingly important set of design patterns. Earlier releases required an explicit opt-in to use runtime async support in the CLR. In Preview 1, runtime async on CoreCLR is enabled by default; you don’t need to do anything to test how your code works with this feature, apart from installing the preview bits and using them with your applications.

For now, this new tool is limited to your own code, as core libraries are still compiled without runtime async support. That will change in the next few months as libraries are recompiled and added to future previews. Third-party code will most likely wait until Microsoft releases a preview with a “go live” license.

You can get a feel for how this feature is progressing by reading what the documentation describes as an “epic issue.” This lists the current state of the feature and what steps need to be completed. Work began during the .NET 10 timeframe, so much of the foundational work has been completed. Several key features are still listed as open issues, however, including just-in-time support on multicore systems and certain key optimization techniques, such as using profile-guided optimization to recompile on the fly in response to actual workloads.

It’s important to note that issues like these are a small part of what needs to be delivered to land runtime async support in .NET 11. With several months between Preview 1’s arrival and the final general availability release, the .NET team has plenty of time to deliver these pieces.

With the feature in development, you’ll still need to set project file flags to enable support in ahead-of-time (AOT) compiled applications. This entails adding a couple of lines to the project file and then recompiling the application. For now, it’s a good idea to build and test with AOT runtime async and then recompile when you are ready to try out the new feature.

Changes to hardware support

One issue to note is that the updated .NET runtime in .NET 11 has new hardware requirements, and older hardware may not be compatible. It needs modern instruction sets. Arm64 now requires armv8.0-a with LSE (armv8.2-a with RCPC on Windows and M1 on macOS), and x64 on Windows and Linux needs x86-64-v3.

This is where you might find some breaking changes, as older hardware will now give an error message and code will not run. This shouldn’t be an issue for most modern PCs, devices, and servers, as these requirements align with .NET’s OS support, rather than supporting older hardware that’s becoming increasingly rare. However, if you’re running .NET on hardware that’s losing support, you will need to upgrade or stick with older code for another year or two.

There are other hardware platforms that get .NET support, with runtimes delivered outside of the environment. This includes support for RISC-V hardware and IBM mainframes. For now, both are minority interests: one to support migrations and updates to older enterprise software, and one to deliver code on the next generation of open hardware. It’ll be interesting to see if RISC-V support becomes mainstream, as silicon performance is improving rapidly and RISC-V is already available in common Internet of Things development boards and processors from organizations and companies like Raspberry Pi, where it is part of the RP2350 microcontroller system on a chip.

Things like this make it interesting to read the runtime documentation at the start of a new cycle of .NET development. By reading the GitHub issues and notes, we can see some of the thinking that goes into .NET and can take advantage of the project’s open design philosophy to plan our own software development around code that won’t be generally available until the end of the year.

It’s still important to understand the underpinnings of a platform like .NET. The more we know, the more we can see that a lot of moving parts come together to support our code. It’s useful to understand where we can take advantage of compilers and runtimes to improve performance, reliability, and reach.

After all, that’s what the teams are doing as they build the languages we will use to write our applications as .NET moves on to another preview, another step on the road to .NET 11’s eventual release.

Why AI requires rethinking the storage-compute divide 3 Mar 2026, 9:00 am

For more than a decade, cloud architectures have been built around a deliberate separation of storage and compute. Under this model, storage became a place to simply hold data while intelligence lived entirely in the compute tier.

This design worked well for traditional analytics jobs operating on structured, table-based data. These workloads are predictable, often run on a set schedule, and involve a smaller number of compute engines operating over the datasets. But as AI reshapes enterprise infrastructure and workload demands, shifting data processing toward massive volumes of unstructured data, this model is breaking down.

What was once an efficiency advantage is increasingly becoming a structural cost.

Why AI exposes the cost of separation

AI introduces fundamentally different demands than the analytics workloads businesses have grown accustomed to. Instead of tables and rows processed in batch jobs by an engine, modern AI pipelines now process large amounts of unstructured and multimodal data, while also generating large volumes of embeddings, vectors, and metadata. At the same time, processing is increasingly continuous, with many compute engines touching the same data repeatedly—each pulling the data out of storage and reshaping it for its own needs.

The result isn’t just more data movement between storage and compute, but more redundant work. The same dataset might be read from storage, transformed for model training, then read again and reshaped for inference, and again for testing and validation—each time incurring the full cost of data transfer and transformation. Given this, it’s no surprise that data scientists spend up to 80% of their time just on data preparation and wrangling, rather than building models or improving performance.

While these inefficiencies can be easy to overlook at a smaller scale, they quickly become a primary economic constraint as AI workloads grow, translating not only into wasted hours but real infrastructure cost. For example, 93% of organizations today say their GPUs are underutilized. With top-shelf GPUs costing several dollars per hour across major cloud platforms, this underutilization can quickly compound into tens of millions of dollars of paid-for compute going to waste. As GPUs increasingly dominate infrastructure budgets, architectures that leave them waiting on I/O become increasingly difficult to justify.
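To see how idle time turns into that kind of waste, here is a rough back-of-the-envelope sketch. The `GpuFleet` shape, the `idleGpuSpendUsd` helper, and every number used with it are hypothetical and chosen only for illustration; none of these figures come from the survey cited above.

```typescript
// Hypothetical description of a rented GPU fleet.
interface GpuFleet {
  gpuCount: number;      // number of GPUs being paid for
  hourlyRateUsd: number; // price per GPU-hour in US dollars
  utilization: number;   // fraction of paid hours doing useful work (0 to 1)
  hours: number;         // length of the billing period in hours
}

// Spend attributable to idle time = total spend * (1 - utilization).
function idleGpuSpendUsd(fleet: GpuFleet): number {
  if (fleet.utilization < 0 || fleet.utilization > 1) {
    throw new RangeError("utilization must be between 0 and 1");
  }
  const totalSpendUsd = fleet.gpuCount * fleet.hourlyRateUsd * fleet.hours;
  return totalSpendUsd * (1 - fleet.utilization);
}
```

With made-up inputs of 1,000 GPUs at $4 per hour, 40% utilization, and a full year of 8,760 hours, the total bill is about $35 million and the idle share is about $21 million.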

From passive storage to smart storage

The inefficiencies exposed by AI workloads point to a fundamental shift in how storage and compute must interact. Storage can no longer exist solely as a passive system of record. To support modern AI workloads efficiently and get the most value out of the data that companies have at their disposal, compute must move closer to where data already lives.

Industry economics make this clear. A terabyte of data sitting in traditional storage is largely a cost center. When that same data is moved into a platform with an integrated compute layer, its economic value increases by multiples. The data itself hasn’t changed; the only difference is the presence of compute that can transform that data and serve it in useful forms.

Rather than continuing to move data to capture that value, the answer is to bring compute to the data. Data preparation should happen once, where the data lives, and be reused across pipelines. Under this model, storage becomes an active layer where data is transformed, organized, and served in forms optimized for downstream systems.

This shift changes both performance and economics. Pipelines move faster because data is pre-prepared. Hardware stays more productive because GPUs spend less time waiting on redundant I/O. The costs of repeated data preparation begin to disappear.

Under this new model, “smart storage” changes data from something that is merely stored to a resource that is continuously understood, enriched, and made ready for use across AI systems. Rather than leaving raw data locked in passive repositories and relying on external pipelines to interpret it, smart storage applies compute directly within the data layer to generate persistent transformations, metadata, and optimized representations as data arrives.

By preparing data once and reusing it across workflows, organizations allow storage to become an active platform instead of a bottleneck. Without this shift, organizations remain trapped in cycles of redundant data processing, constant reshaping, and compounding infrastructure cost.
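The prepare-once, reuse-everywhere idea can be illustrated with a small sketch. The `PreparedStore` class below is a hypothetical in-memory stand-in for a storage layer that keeps prepared data, not any vendor’s API; a real system would persist the prepared form next to the raw data.

```typescript
// Hypothetical stand-in for a storage layer that retains prepared data.
class PreparedStore<T> {
  private readonly prepared: { [datasetId: string]: T } = Object.create(null);
  private count = 0;

  // Runs `prepare` only the first time a dataset is requested. Later
  // callers (training, inference, validation) reuse the stored result.
  getOrPrepare(datasetId: string, prepare: () => T): T {
    const existing = this.prepared[datasetId];
    if (existing !== undefined) {
      return existing;
    }
    const fresh = prepare();
    this.prepared[datasetId] = fresh;
    this.count += 1;
    return fresh;
  }

  // Number of times preparation actually ran.
  preparationCount(): number {
    return this.count;
  }
}
```

If a training job and an inference job both request the same dataset ID, the expensive `prepare` callback runs once and the second caller receives the stored result, which is the behavior the separated model makes each engine repeat for itself.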

Preparing for AI-era infrastructure

The cloud’s separation of storage and compute was the right architectural decision for its time. But AI workloads have fundamentally changed the economics of data and exposed the limits of this approach—a constraint I’ve watched kill numerous enterprise AI initiatives, and a core reason I founded DataPelago.

While the industry has begun focusing on accelerating individual steps in the data pipeline, efficiency is no longer determined by squeezing marginal gains from existing architectures. It is now determined by building new architectures that make data usable without repeated preparation, excessive movement, or wasted compute. As AI’s demands continue to crystallize, it is becoming increasingly clear that the next generation of infrastructure will be defined by how intelligently storage and compute are brought together.

The companies that succeed will be the ones that make smart storage a foundation of their AI strategy.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.

Rust developers have three big worries – survey 2 Mar 2026, 10:20 pm

Rust developers are mostly satisfied with the current pace of evolution of the programming language, but many worry that Rust does not get enough usage in the tech industry, that Rust may become too complex, and that the developers and maintainers of Rust are not properly supported.

These findings are featured in the Rust Survey Team’s 2025 State of Rust Survey report, which was announced March 2. The survey ran from November 17, 2025, to December 17, 2025, and tallied 7,156 responses, with different numbers of responses for different questions.

Asked their opinion of the pace at which the Rust language is evolving, 57.6% of the developers surveyed reported being satisfied with the current pace, compared to 57.9% in the 2024 report. Asked about their biggest worries for the future of Rust, 42.1% cited not enough usage in the tech industry, compared to 45.5% in 2024. The other biggest worries were that Rust may become too complex (41.6% in 2025 versus 45.2% in 2024) and that the developers and maintainers of Rust are not properly supported (38.4% in 2025 versus 35.4% in 2024).

The survey also asked developers which aspects of Rust present non-trivial problems to their programming productivity. Here slow compilation led the way, with 27.9% of developers saying slow compilation was a big problem and 54.68% saying that compilation could be improved but did not limit them. High disk space usage and a subpar debugging experience were also top complaints, with 22.24% and 19.90% of developers citing them as big problems.

In other findings in the 2025 State of Rust Survey report:

- 91.7% of respondents reported using Rust in 2025, down from 92.5% in 2024. But 55.1% said they used the language daily or nearly daily last year, up from 53.4% in 2024.

- 56.8% said they were productive using Rust in 2025, compared to 53.5% in 2024.

- When it comes to operating systems in 2025, 75.2% were using Linux regularly; 34.1% used macOS, and 27.3% used Windows. Linux also was the most common target of Rust software development, with 88.4% developing for Linux.

- 84.8% of respondents who used Rust at work said that using Rust has helped them achieve their goals.

- Generic const expressions was the leading unimplemented or nightly-only feature that respondents in 2025 were looking to see stabilized, with 18.35% saying the feature would unblock their use case and 41.53% saying it would improve their code.

- Visual Studio Code was the IDE most commonly used to code with Rust on a regular basis in 2025, with 51.6% of developers favoring it.

- 89.2% reported using the most current version of Rust in 2025.

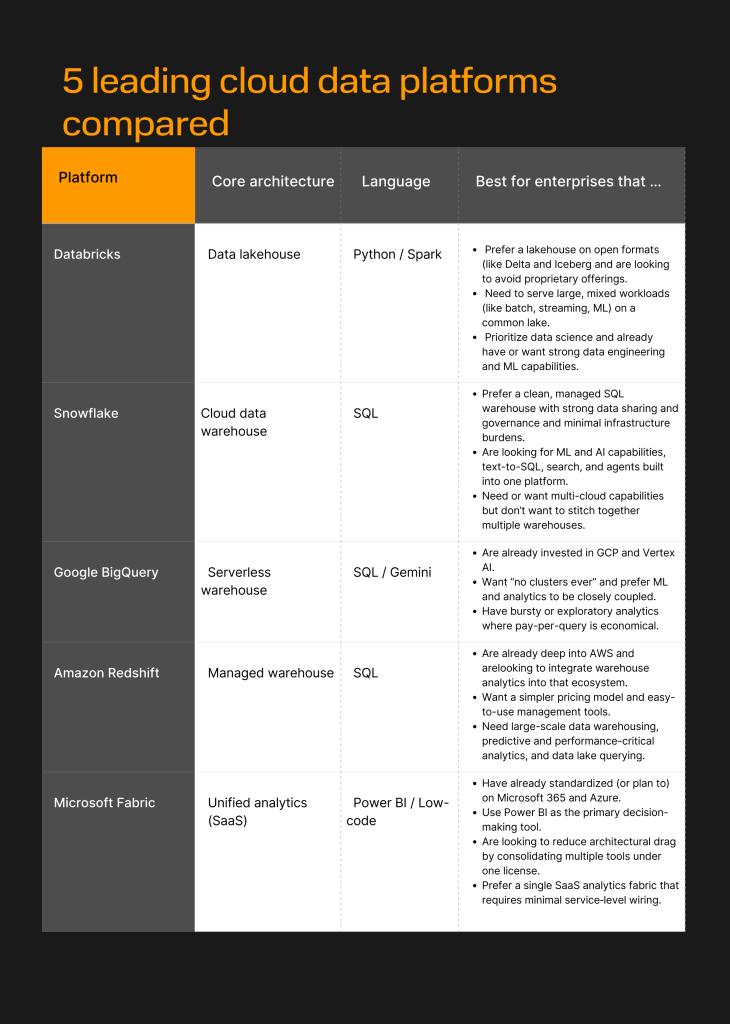

Buyer’s guide: Comparing the leading cloud data platforms 2 Mar 2026, 3:30 pm

Choosing the right data platform is critical for the modern enterprise. These platforms not only store and protect enterprise data, but also serve as analytics engines that source insights for pivotal decision-making.

There are many offerings on the market, and they continue to evolve with the advent of AI. However, five prominent players — Databricks, Snowflake, Amazon Redshift, Google BigQuery, and Microsoft Fabric — stand out as the leading options for your enterprise.

Databricks

Founded in 2013 by the creators of the open-source analytics platform Apache Spark, Databricks has established itself as one of the dominant players in the data market. Notably, the company coined the term and developed the concept of a data lakehouse, which combines the capabilities of data lakes and data warehouses to give enterprises a better handle on their data estates.

Data lakehouses create a single platform incorporating both data lakes (where large amounts of raw data are stored) and data warehouses (which contain categories of structured data) that typically operate as separate architectures. This unified system allows enterprises to query all data sources together and govern the workloads that use that data.

The lakehouse has become its own category and is now widely used and incorporated into many IT stacks.

Databricks presents itself as a “data+AI” company, and calls itself the only platform in the industry featuring a unified governance layer across data and AI, as well as a single unified query engine across ML, BI, SQL, and ETL.

Databricks’ Data Intelligence Platform has a strong focus on ML/AI workloads and is deeply tied to the Apache Spark ecosystem. Its open, flexible environment supports almost any data type and workload.

Further, to support the agentic AI era, Databricks has rolled out a Mosaic-powered Agent Bricks offering, which gives users tools to deploy customized AI agents and systems based on their unique data and needs. Enterprises can use retrieval-augmented generation (RAG) to build agents on their custom data and use Databricks’ vector database as a memory function.

Core platform: Databricks’ core offering is its Data Intelligence Platform, which is cloud-native — meaning it was designed from the get-go for cloud computing — and built to understand the semantics of enterprise data (thus the “intelligence” part).

The platform sits on a lakehouse foundation and open-format software interfaces (Delta Lake and Apache Iceberg) that support standardized interactions and interoperability. It also incorporates Databricks’ Unity Catalog, which centralizes access control, quality monitoring, data discovery, auditing, lineage, and security.

DatabricksIQ, Databricks’ Data Intelligence Engine, fuels the platform. It uses generative AI to understand semantics, and is based on innovations from MosaicML, which Databricks acquired in 2023.

Deployment method: Databricks is a built-on cloud platform that has established partnerships with the top cloud providers, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Pricing: A pay-as-you-go model with no upfront costs. Customers only pay for the products they use at “per second granularity.” There are different pricing-per-unit options for data engineering, data warehousing, interactive workloads, AI, and operational databases (ranging from $0.07 to $0.40). Databricks also offers committed use contracts that provide discounts when customers commit to certain levels of usage.

Challenges/trade-offs: Operation can be more complex and less “plug and play”: Users are essentially running an Apache Spark-based platform, so there’s more to manage than in serverless environments that are easier to operate and tune. Pricing models also tend to be more complex.

Additional considerations for Databricks

- A unified stack provides data pipelines, feature engineering, BI, ML training, and other complex tasks on the same storage layer.

- Support for open formats and engines — including Delta and Iceberg — doesn’t lock users into a storage engine.

- Unity Catalog provides a common governance layer, and data descriptions and tags can help the platform learn an enterprise’s unique semantics.

- Agent Bricks and MLflow offer a strong AI and ML toolkit.

Snowflake

Snowflake, founded in 2013, is considered a pioneer in cloud data warehousing, serving as a centralized repository for both structured and semi-structured data that enterprises can easily access for analysis and business intelligence (BI).

The company is considered a direct competitor to Databricks. In fact, as a challenge to the data lakehouse pioneer, Snowflake claims it has always been a hybrid of data warehouses and data lakes.

Core platform: Snowflake positions itself as an ‘AI Data Cloud’ that can manage all data-driven enterprise activities. Like Databricks, its platform is cloud-native and it unifies storage, elastic compute, and cloud services.

Snowflake can support AI model development (notably through its agent-builder platform Cortex AI), advanced analytics, and other data-heavy tasks. Its Snowgrid cross-cloud layer supports global connectivity across different regions and clouds (thus allowing for consistency in performance) while a Snowflake Horizon governance layer manages access, security, privacy, compliance, and interoperability.

Integrated Snowpipe and Openflow capabilities allow for real-time ingestion, integration, and streaming, while Snowpark Connect supports migration and interoperability with Apache Spark codebases. Further, Cortex AI allows users to securely run large language models (LLMs) and build generative AI and agentic apps.

Deployment method: Like Databricks, Snowflake has partnerships with major players, running as software-as-a-service (SaaS) on AWS, Azure, GCP, and other cloud providers. Notably, a key strategic partnership with Microsoft allows customers to buy and run Azure Databricks directly and integrate with other Azure services.

Pricing: A consumption-based pricing model. Customers are charged for compute in credits costing $2 and up based on subscription edition (standard, enterprise, business critical or virtual private Snowflake) and cloud region. A monthly fee for data stored in Snowflake is calculated based on average use.

Snowflake strengths: Snowflake positions itself as a turnkey, managed SQL platform for data‑intensive applications with strong governance and minimal tuning required.

Further, the company continues to innovate in the agentic AI era. For instance, Snowflake Intelligence allows users to ask questions, and get answers, about their data in natural language. Cortex AI provides secure access to leading LLMs: Teams can call models, perform text-to-SQL commands, and run RAG inside Snowflake without exposing their data.

Snowflake challenges/trade-offs

- Snowflake’s proprietary storage and compute engine are less open and controllable than a lakehouse environment.

- Cost can be difficult to visualize and manage due to credit-based pricing and serverless add-ons.

- Users have reported weaker support for unstructured data and data streaming.

Additional considerations for Snowflake

- Elastic compute provides strong performance for numerous users, data volumes, and workloads in a single, scalable engine.

- There’s little infrastructure to manage: Snowflake abstracts away most capabilities, such as optimization, planning, and authentication.

- Storage is interoperable and users get un-siloed access.

- Snowgrid capabilities work across regions and clouds (whether AWS, Azure, GCP, or others) to allow for data sharing, portable workloads, and consistent global policies.

These five platforms are the dominant leaders in the cloud data ecosystem. While they all handle large-scale analytics, they differ significantly in their architecture (e.g., warehouse vs. lakehouse), ecosystem ties, and target users.

Amazon Redshift

Amazon Web Services (AWS) Redshift is Amazon’s fully managed, petabyte-scale cloud data warehouse designed to replace more complex, expensive on-premises legacy infrastructure.

Core platform: Amazon Redshift is a queryable data warehouse optimized for large-scale analytics on massive datasets. It is built on two core architectural pillars: columnar storage and massively parallel processing (MPP). Data is stored by column rather than by row, and MPP distributes query work across multiple nodes that process their portions of the data in parallel.
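The interplay of those two pillars can be illustrated with a small sketch. It is not Redshift's implementation: the sample records are invented, and worker threads merely stand in for compute nodes. It only shows why a columnar layout lets an aggregate touch a single column, and how partial results from parallel workers are combined.

```python
from concurrent.futures import ThreadPoolExecutor

# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120},
    {"order_id": 2, "region": "US", "amount": 80},
    {"order_id": 3, "region": "EU", "amount": 200},
    {"order_id": 4, "region": "US", "amount": 50},
]

# Column-oriented layout: each column is stored contiguously, so an
# aggregate over "amount" never has to read "order_id" or "region".
columns = {name: [row[name] for row in rows] for name in rows[0]}


def split(values, parts):
    """Divide a column into roughly equal slices, one per worker."""
    size = max(1, -(-len(values) // parts))  # ceiling division, at least 1
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_sum(values, workers=2):
    """Each worker sums its slice; the partial results are then combined."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum, split(values, workers)))
    return sum(partials)


total = parallel_sum(columns["amount"])
print(total)
```

In a real MPP warehouse the slices live on separate machines and a leader node merges the partial results, but the split, compute, and combine shape is the same.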

Redshift uses standard SQL to interact with data in relational databases and integrates with extract, transform, load (ETL) tools, such as AWS Glue, that manage and prepare data. Through its Amazon Redshift Spectrum feature, users can directly query data from files on Amazon Simple Storage Service (Amazon S3) without having to load data into tables.

Additionally, with Amazon Redshift ML, developers can use simple SQL to build and train Amazon SageMaker machine learning (ML) models based on their Redshift data.

Redshift is deeply integrated in the AWS ecosystem, allowing for easy interoperability with numerous other AWS services.

Deployment method: Amazon Redshift is fully managed by AWS and is offered in both provisioned (a flat, predetermined rate for a set amount of resources, whether used or not) and serverless (pay-per-use) options.

Pricing: Offers two deployment options, provisioned and serverless. Provisioned starts at $0.543 per hour, while serverless begins at $1.50 per hour. Both options scale to petabytes of data and support thousands of concurrent users.
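A rough break-even calculation shows how the two entry prices compare. This is a simplified sketch: it assumes a 730-hour month, treats provisioned capacity as billed around the clock and serverless as billed only for active hours, and ignores that the two entry prices may buy different amounts of compute. The function names are illustrative.

```python
HOURS_PER_MONTH = 730  # assumed average month length in hours

PROVISIONED_RATE = 0.543  # USD per hour, entry price quoted above
SERVERLESS_RATE = 1.50    # USD per hour, entry price quoted above


def provisioned_cost(hours_in_month=HOURS_PER_MONTH):
    """Provisioned capacity is billed for every hour, used or not."""
    return PROVISIONED_RATE * hours_in_month


def serverless_cost(active_hours):
    """Serverless is billed only for hours in which queries run."""
    return SERVERLESS_RATE * active_hours


def break_even_hours(hours_in_month=HOURS_PER_MONTH):
    """Active hours at which both options cost the same."""
    return provisioned_cost(hours_in_month) / SERVERLESS_RATE


print(f"Provisioned, always on: ${provisioned_cost():.2f} per month")
print(f"Break-even at about {break_even_hours():.0f} active hours per month")
```

Under these assumptions, a workload that is active for fewer hours than the break-even point would cost less on serverless, while a steadier workload would favor provisioned capacity.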

Amazon Redshift strengths: AWS Redshift’s main differentiator is its strong integration in the broader AWS ecosystem: It can easily be connected with S3, Glue, SageMaker, Kinesis data streaming, and other AWS services. Naturally, this makes it a good fit for enterprises already leaning heavily into AWS. They can securely access, combine, and share data with minimal movement or copying.

Further, AWS has introduced Amazon Q, a generative AI assistant with specialized capabilities for software developers, BI analysts, and others building on AWS. Users can ask Amazon Q about their data to make decisions, speed up tasks and, ideally, increase productivity.

Amazon Redshift challenges/trade-offs

- Ecosystem lock-in: While it fits quickly and easily into the AWS environment, Redshift might not be a good fit for enterprises with multi-cloud or cloud-agnostic strategies.

- Although it is managed by AWS, users say Redshift is not as hands-off as other options. Some compaction tasks (vacuum) must be run manually, ETL processes must be checked regularly, and unusual queries that can negatively impact service performance must be monitored continuously.

Additional considerations for Redshift

- Devs find Redshift easy to use because of its SQL backbone.

- The platform is highly performant and scalable thanks to its columnar architecture, decoupled compute and storage, and MPP.

- AWS offers flexible deployment options: provisioned clusters for more predictable workloads, serverless for spikier ones.

- Zero-ETL capabilities simplify data ingestion without complex pipelines, thus supporting near real-time analytics.

Google BigQuery

Google BigQuery started out as a fully managed cloud data warehouse that Google now sells as an autonomous data and AI platform that automates the entire data lifecycle.

Core platform: Google BigQuery is a serverless, distributed, columnar data warehouse optimized for petabyte-scale workloads and SQL‑based analytics. It is built on Google’s Dremel execution engine, which allocates compute to queries on an as-needed basis and can quickly analyze terabytes of data with fewer resources.

BigQuery decouples compute (Dremel) and storage, housing data in columns in Google’s distributed file system Colossus. Data can be ingested from operational systems, logs, SaaS tools, and other sources, typically via extract, transform, load (ETL) tools.

BigQuery uses familiar SQL commands, allowing developers to easily train, evaluate, and run ML models for capabilities like linear regression and time-series forecasting for prediction, and k-means clustering for analytics. Combined with Vertex AI, the platform can perform predictive analytics and run AI workflows on top of warehouse data.

Further, BigQuery can integrate agentic AI, such as pre-built data engineering, data science, analytics, and conversational analytics agents, or devs can use APIs and agent development kit (ADK) integrations to create customized agents.

Deployment method: BigQuery is fully managed by Google and serverless by default, meaning users do not need to provision or manage individual servers or clusters.

Pricing: Offers three pricing tiers. Free users get up to 1 tebibyte (TiB) of queries per month. On-demand pricing (per-TiB) charges customers based on the number of bytes processed by each query. Capacity pricing (per slot-hour) charges customers based on compute capacity used to run queries, measured in slots (virtual CPUs) over time.
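The difference between the two paid models can be sketched as two formulas. The rates and workload figures below are hypothetical placeholders rather than Google list prices, and applying the 1 TiB free allowance to on-demand usage is an assumption made for illustration.

```python
FREE_TIB_PER_MONTH = 1.0  # free query allowance described above (assumed to offset on-demand usage)


def on_demand_cost(tib_processed, rate_per_tib):
    """On-demand: pay per TiB processed beyond the monthly free allowance."""
    billable = max(0.0, tib_processed - FREE_TIB_PER_MONTH)
    return billable * rate_per_tib


def capacity_cost(slots, hours, rate_per_slot_hour):
    """Capacity: pay for compute capacity (slots) held over time."""
    return slots * hours * rate_per_slot_hour


# Hypothetical rates and workload, chosen only for illustration.
scan_heavy = on_demand_cost(tib_processed=51, rate_per_tib=6.0)
reserved = capacity_cost(slots=100, hours=40, rate_per_slot_hour=0.05)
print(f"On-demand: ${scan_heavy:.2f}  Capacity: ${reserved:.2f}")
```

The on-demand bill grows with the bytes each query processes, which is why partitioning and clustering matter for cost, while the capacity bill grows with how much compute is held and for how long.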

Google BigQuery strengths: BigQuery is deeply coupled with the GCP ecosystem, making it an easy choice for enterprises already heavily using Google products. It is scalable, fast, and truly serverless, meaning customers don’t have to manage or provision infrastructure.

GCP also continues to innovate around AI: BigQuery ML (BQML) helps analysts build, train, and launch ML models with simple SQL commands directly in the interface, and Vertex AI can be leveraged for more advanced MLOps and agentic AI workflows.

Google BigQuery challenges/trade-offs

- Costs for heavy workloads can be unpredictable, requiring discipline around partitioning and clustering.

- Users report difficulties around testing and schema mismatches during ETL processes.

Other considerations for BigQuery

- BigQuery can analyze petabytes of data in seconds because its architecture decouples storage (Colossus) and compute (Dremel engine).

- Google automatically handles resource allocation, maintenance, and scaling, so teams do not have to focus on operations.

- Flexible payment models cover both predictable and more sporadic workloads.

- Standard SQL support means analysts can use their existing skills to query data without retraining.

Microsoft Fabric

Microsoft Fabric is a SaaS data analytics platform that integrates data warehousing, real-time analytics, and business intelligence (BI). It is built on OneLake, Microsoft’s “logical” data lake that uses virtualization to provide users a single view of data across systems.

Core platform: Fabric is delivered via SaaS and all workloads run on OneLake, Microsoft’s data lake built on Azure Data Lake Storage (ADLS). Fabric’s catalog provides centralized data lineage, discovery, and governance of analytics artifacts (tables, lakehouses and warehouses, reports, ML tools).

Several workloads run on top of OneLake so that they can be chained without moving data across services. These include a data factory (with pipelines, dataflows, connectors, and ETL/ELT to ingest and process data); a lakehouse with Spark notebooks and pipelines for data engineering on a Delta format; and a data warehouse with SQL endpoints, T‑SQL compatibility, clustering and identity columns, and migration tooling.

Further, real-time intelligence based on Microsoft’s Eventstream and Activator tools ingests telemetry and other Fabric events without the need for coding; this allows teams to monitor data and automate actions. Microsoft’s Power BI sits natively on OneLake, and a Direct Lake feature can query lakehouse data without importing or dual storage.

Fabric also integrates with Azure Machine Learning and Foundry so users can develop and deploy models and perform inferencing on top of Fabric datasets. Further, the platform features integrated Microsoft Copilot agents. These can help users write SQL queries, notebooks, and pipelines; generate summaries and insights; and populate code and documentation.

Microsoft recommends a “medallion” lakehouse architecture in Fabric. The goal of this type of format is to incrementally improve data structure and quality. The company refers to it as a “three-stage” cleaning and organizing process that makes data “more reliable and easier to use.”

The three stages are: Bronze (raw data that is stored exactly as it arrives); Silver (cleaned, errors fixed, formats standardized, and duplicates removed); and Gold (curated and ready to be organized into reports and dashboards).
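The three stages can be illustrated with a minimal, in-memory sketch. It does not use Fabric, Spark, or Delta APIs; the sample records, field names, and the choice to drop unparseable rows are all assumptions made for illustration.

```python
from datetime import date

# Bronze: raw records stored exactly as they arrive (hypothetical sample).
bronze = [
    {"id": "1", "amount": " 19.90", "day": "2026-03-01"},
    {"id": "1", "amount": " 19.90", "day": "2026-03-01"},  # duplicate
    {"id": "2", "amount": "n/a", "day": "2026-03-01"},      # bad value
    {"id": "3", "amount": "5.10", "day": "2026-03-02"},
]


def to_silver(raw_records):
    """Clean: standardize formats, drop unparseable rows, remove duplicates."""
    seen, cleaned = set(), []
    for record in raw_records:
        try:
            row = {
                "id": int(record["id"]),
                "amount": float(record["amount"].strip()),
                "day": date.fromisoformat(record["day"]),
            }
        except ValueError:
            continue  # a fuller pipeline would quarantine the row instead
        if row["id"] not in seen:
            seen.add(row["id"])
            cleaned.append(row)
    return cleaned


def to_gold(silver_records):
    """Curate: aggregate into a report-ready daily revenue table."""
    totals = {}
    for row in silver_records:
        totals[row["day"]] = totals.get(row["day"], 0.0) + row["amount"]
    return {day.isoformat(): round(total, 2) for day, total in sorted(totals.items())}


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Keeping the bronze data untouched means the silver and gold layers can always be rebuilt if a cleaning rule changes.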

Deployment method: Fabric is offered as a SaaS fully managed by Microsoft and hosted in its Azure cloud computing platform.

Pricing: A capacity-based licensing model (F SKUs) with two billing options: flexible pay-as-you-go, which is billed per second and can be scaled up or paused; and reserved capacity, prepaid 1- to 3-year plans that can offer savings of up to 40% to 50% for predictable workloads. Data storage in OneLake is typically priced separately.

Microsoft Fabric strengths

- Explicitly designed as an all‑in‑one SaaS, meaning one platform for ingestion, lakehouse, warehouse, and real‑time ML and BI.

- Built-in Copilot can help accelerate common tasks (such as documentation or SQL), which users report as an advantage over competitors whose AI tools aren’t as tightly integrated.

- Microsoft recommends and documents medallion architecture, with lake views that automate evolutions from bronze to silver to gold.

Microsoft Fabric challenges/trade-offs

- Fabric is newer (it reached general availability in 2023); users complain that some features feel early-stage, and documentation and best practices aren’t as evolved.

- Can lead to lock-in to the Microsoft stack, which makes it less appealing to enterprises looking for more open, multi‑cloud tools like Databricks or Snowflake.

- Because pricing is capacity/consumption‑based, careful FinOps may be necessary to avoid surprises.

Other considerations for Microsoft Fabric

- Direct Lake mode allows Power BI to analyze massive datasets directly from OneLake without the “import/refresh” cycles required by other platforms.

- A zero-ETL capability allows Fabric to virtualize data from Snowflake, Databricks, or Amazon S3. Users can see and query their Snowflake tables inside Fabric without moving a single byte of data.

- Copilot Integration: Native AI assistants help users write Spark code, build data factory pipelines, and even generate entire Power BI reports from natural language prompts.

Bottom line

Choosing the right cloud data platform is a strategic decision extending beyond simple storage and access. Leading providers now blend data stores, governance layers, and advanced AI capabilities, but they differ when it comes to operational complexity, ecosystem integration, and pricing.

Ultimately, the right choice depends on an organization’s individual cloud strategy, operational maturity, workload mix, AI ambitions, and ecosystem preference — lock-in versus architectural flexibility.

FinOps for agents: Loop limits, tool-call caps and the new unit economics of agentic SaaS 2 Mar 2026, 10:00 am

The first time my team shipped an agent into a real SaaS workflow, the product demo looked perfect. The production bill did not. A small percentage of sessions hit messy edge cases, and our agent responded the way most agents do: it tried harder. It re-planned, re-queried, re-summarized and retried tool calls. Users saw a slightly slower response, and finance saw a step-change in variable spend.

That week changed how we think about agent design. In agentic SaaS, cost is a reliability metric. Loop limits and tool-call caps protect your margin.

I call this discipline FinOps for Agents: a practical way to govern loops, tools and model spend so your gross margin survives contact with real customers. I have found progress comes from putting product, engineering and finance in the same room, replaying agent traces and agreeing on guardrails that define the user experience.
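As a minimal sketch of what such guardrails can look like in code, the example below wraps an agent loop in a per-session budget. Everything here is hypothetical: the class and function names, the cap values, and the flat per-call cost are assumptions for illustration, not a specific framework's API or the limits any particular team uses.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when a session hits one of its guardrails."""


@dataclass
class SessionBudget:
    max_loops: int = 5          # hypothetical cap on plan/act iterations
    max_tool_calls: int = 10    # hypothetical cap on tool invocations
    max_cost_usd: float = 0.50  # hypothetical spend ceiling per session
    loops: int = 0
    tool_calls: int = 0
    cost_usd: float = 0.0

    def start_loop(self):
        self.loops += 1
        if self.loops > self.max_loops:
            raise BudgetExceeded("loop limit reached")

    def record_tool_call(self, cost_usd):
        self.tool_calls += 1
        self.cost_usd += cost_usd
        if self.tool_calls > self.max_tool_calls:
            raise BudgetExceeded("tool-call cap reached")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded("spend ceiling reached")


def run_agent(step, budget):
    """Run `step` until it reports completion or a guardrail trips.

    `step` is a stand-in for one plan/act iteration; it receives the budget,
    records its own tool calls, and returns True when the task is done.
    """
    try:
        while True:
            budget.start_loop()
            if step(budget):
                return "completed"
    except BudgetExceeded as reason:
        return f"stopped: {reason}"


def stuck_step(budget):
    """Simulates a messy edge case: retries a tool and never finishes."""
    budget.record_tool_call(cost_usd=0.02)
    budget.record_tool_call(cost_usd=0.02)
    return False


budget = SessionBudget()
outcome = run_agent(stuck_step, budget)
print(outcome, budget.loops, budget.tool_calls)
```

When a guardrail trips, the session ends with an explicit reason instead of silently trying harder, which gives the product a defined fallback and gives finance a hard ceiling per session.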

Why does FinOps look different for agentic SaaS?